Has the shift from paper to online testing led to lower scores on standardized tests? Researchers Ben Backes and James Cowan of the American Institutes for Research explored the question by examining two years of results (2015 and 2016) from the PARCC assessment in Massachusetts, where some districts delivered the tests online and others stuck with paper. We sat down with Backes to discuss his somewhat surprising findings.

This is an interesting study looking at the difference between taking tests online and on paper. Did you find the pen is actually mightier than the keyboard?

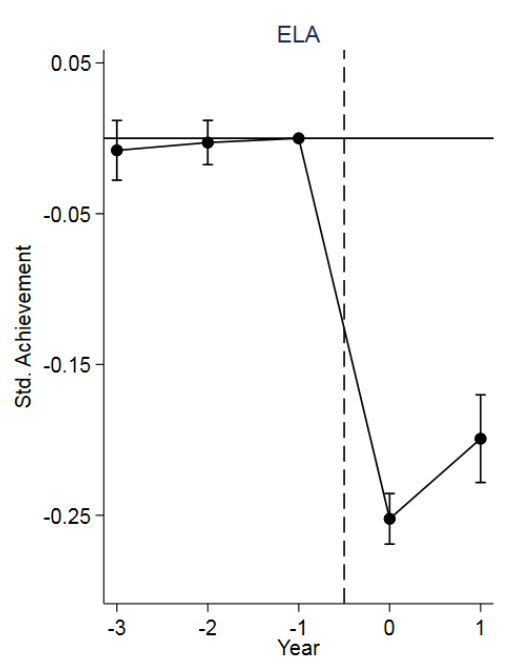

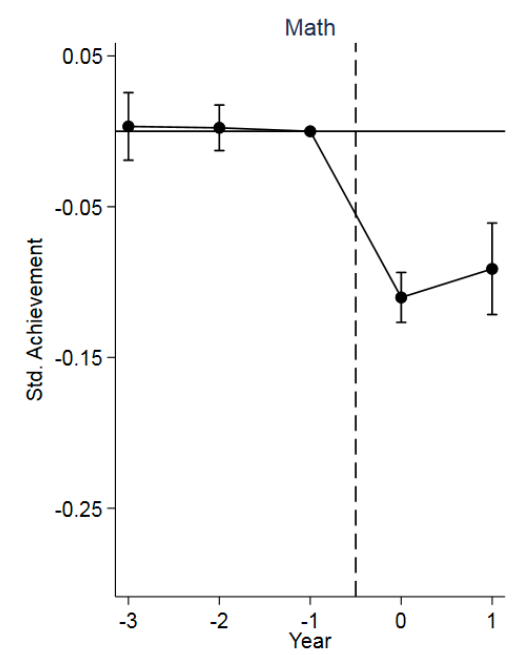

At least in the time period that we studied, there is pretty compelling evidence that for two students who are otherwise similar, if one took the test on paper and one took the test on a computer, then the student taking the test on paper would score higher. And that’s controlling for everything we can control for, whether it’s the school that a student is in, or their previous history, or demographic information. It looks like there are pretty meaningful differences in how well students score across test modes. We found mode effects of about 0.10 standard deviations in math and 0.25 standard deviations in English Language Arts. That amounts to up to 5.4 months of learning in math and 11 months of learning in ELA in a single year.

Could it be that higher-performing districts are choosing paper and the lower-performing are choosing computers?

What’s interesting is that there is a difference in the prior achievement of the districts that chose paper versus online, but it’s perhaps not what you would expect. At least in Massachusetts, it was districts that switched to online testing that had the higher prior achievement. Back when everyone was taking tests on paper, these districts scored consistently higher year after year. Then once those districts switched to online testing, their achievement fell and it was closer to what the lower-performing districts were experiencing.

And the questions are the same online and on paper, right?

There are some differences, for example, in the format of the reading passages. Everyone knows what a paper test is like. You have this booklet, you can flip through it. You can refer to the passage, back and forth. But if you’re taking the test online, there’s this screen and a scrollbar and you have to manipulate the scrollbar. And it might not be as easy to flip between passages or refer to the passage when you’re reading the questions. So that’s an example of the exact same passage and the exact same questions, but it might be a little bit more difficult to access online.

Why do you think there’s that difference between paper and online?

It’s hard to know the exact reason. One potential reason is what I just mentioned, which is the difference in item formats. Another possibility is that students were just not used to the tests. If that were the case, then we would expect a pretty big difference in the first year and then, over time, we would expect these differences to fade out as students or schools became more used to it.

And you found that, to some extent.

Yes, between year one and year two, we do see evidence of meaningful fadeout in test-mode effects. But even in the second year, mode effects still exist. So the question is, what would happen if we had more than two years of data? If we were able to look three or four years down the road? And in this paper, we can’t do that because after the two years of PARCC, Massachusetts is switching to another test.

Were certain populations of students affected more than others by this?

Not to the extent we thought. The exceptions were at the bottom of the distribution for English Language Arts. Students who came in with lower scores tended to be the ones whose performance was measured to be even worse when they switched to online testing. The other groups most affected were the limited English proficiency and special education students.

Could some of it be their lack of experience or fluency with a computer?

Yes, that’s something we want to get at that we haven’t really had a chance to look at yet. There are these surveys administered by the state that ask about prior computer exposure that we haven’t gotten our hands on yet.

What are the implications of this for test-taking practice? Should we go back to paper? Should we do more training on the computer?

I think going back to paper is probably not going to happen, regardless. This is more a matter of: Here’s evidence and now we should think of ways that we should deal with this given that there’s all this momentum for switching to online testing. There are real advantages of online testing. The items are cheaper to grade. And they’re easier to deliver and transmit. And you get results back faster. And there probably are benefits to students being familiar with performing tasks on computers because that’s what the workplace is switching to anyway.

We can argue whether or not switching to online testing is good or bad, but it’s probably inevitable. So, the real question is: Given these differences, how should state or district education agencies respond to this? And step one is at least acknowledging that there might be a difference.

What about implications for policy? Test scores figure into everything from teacher evaluations to real estate decisions to education reform evaluations.

Right, so if you have a policy where you’re trying to hold schools or teachers or students accountable and some schools are being tested using one measure and others are being tested by another measure, then the state or agency needs to be aware of these differences and have some sort of adjustment where there’s a fair comparison. It’s actually a difficult thing to do, which is why I’m interested to see the details of what Massachusetts is doing, because it’s not as simple as just saying, “Let’s set test scores, average test scores, equal in the paper districts and the online districts.”

This research looked at a state that is using the PARCC test. Can you extrapolate its findings to other assessments? For instance, NAEP scores have been flat for the past couple of years as test takers switched to online testing. Can you draw a conclusion beyond PARCC to all standardized testing?

We wouldn’t really know whether the actual estimates of the mode effect translate to other tests, but we should at least say, there is the potential. And we should do rigorous testing where we’re administering online tests to some students and paper to some students.